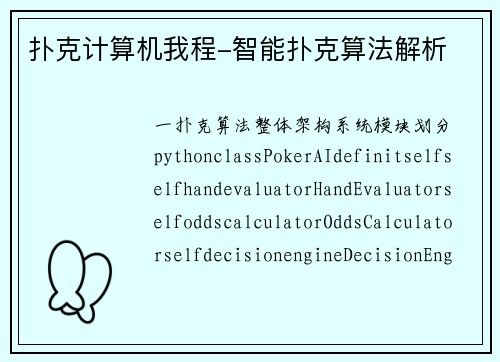

I. Overall Architecture of the Poker Algorithm

System module breakdown

python

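class PokerAI:
    def __init__(self):
        # Each component is instantiated once and reused across decisions.
        self.hand_evaluator = HandEvaluator()
        self.odds_calculator = OddsCalculator()
        self.decision_engine = DecisionEngine()
        self.opponent_modeler = OpponentModeler()

    def make_decision(self, game_state):
        """Combine hand strength, win probability, and opponent tendencies into one decision."""
        # The three helpers below are expected to delegate to the components above; they are not shown here.
        hand_strength = self.evaluate_hand_strength(game_state)
        win_probability = self.calculate_win_probability(game_state)
        opponent_tendency = self.model_opponents(game_state)
        return self.decision_engine.optimize_decision(
            hand_strength, win_probability, opponent_tendency, game_state
        )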

II. Core Algorithms

1. Hand-strength evaluation

python

class HandEvaluator:
    def evaluate_preflop(self, hole_cards):
        """Score a two-card starting hand before the flop."""
        card1, card2 = hole_cards
        # High-card value
        high_card_value = max(card1.rank_value, card2.rank_value)
        # Pair detection
        is_pair = card1.rank == card2.rank
        # Flush potential
        suited = card1.suit == card2.suit
        # Straight potential: distance between the two ranks
        gap_size = abs(card1.rank_value - card2.rank_value)
        score = self.calculate_preflop_score(
            high_card_value, is_pair, suited, gap_size
        )
        return score

    def calculate_preflop_score(self, high_card_value, is_pair, suited, gap_size):
        """Combine the factors into a single score (simplified Chen formula)."""
        if is_pair:
            base_score = max(high_card_value * 2, 5)
        else:
            base_score = high_card_value
        if suited:
            base_score += 2
        # Gap adjustment: wider gaps make straights less likely
        base_score -= gap_size
        return max(0, min(base_score, 20))  # clamp to the 0-20 range
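To make the scoring rule above concrete, here is a minimal, self-contained sketch. The `Card` dataclass, the `RANK_VALUES` mapping, and the free function `preflop_score` are illustrative assumptions rather than part of the classes above. The gap is assumed to be the absolute difference between the two rank values, and the scoring follows the simplified rule in this section, not the full Chen formula.

```python
from dataclasses import dataclass

# Hypothetical rank mapping: 2..10 at face value, J=11, Q=12, K=13, A=14.
RANK_VALUES = {rank: value for value, rank in enumerate("23456789TJQKA", start=2)}


@dataclass(frozen=True)
class Card:
    rank: str  # one of "23456789TJQKA"
    suit: str  # one of "shdc"

    @property
    def rank_value(self) -> int:
        return RANK_VALUES[self.rank]


def preflop_score(card1: Card, card2: Card) -> int:
    """Simplified preflop score, clamped to the 0-20 range."""
    high = max(card1.rank_value, card2.rank_value)
    is_pair = card1.rank == card2.rank
    suited = card1.suit == card2.suit
    gap = abs(card1.rank_value - card2.rank_value)  # assumed gap definition

    score = max(high * 2, 5) if is_pair else high
    if suited:
        score += 2
    score -= gap
    return max(0, min(score, 20))
```

Under these assumptions, ace-king suited scores 15 and seven-deuce offsuit scores 2. Any pair of tens or better hits the cap of 20, so this scale cannot rank the strongest pairs against each other.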

2. Win-probability engine

python

class MonteCarloOddsCalculator:
    def calculate_equity(self, hole_cards, community_cards, opponents_count, iterations=10000):
        """Estimate equity by Monte Carlo simulation."""
        wins = 0
        ties = 0
        for _ in range(iterations):
            # Build the deck of unseen cards; generate_deck is assumed to return it shuffled
            deck = self.generate_deck(hole_cards, community_cards)
            remaining_community = self.draw_remaining_community(deck, community_cards)
            # Deal two hole cards to each opponent
            opponent_hands = []
            for _ in range(opponents_count):
                opp_hand = [deck.pop(), deck.pop()]
                opponent_hands.append(opp_hand)
            # Complete board
            full_board = community_cards + remaining_community
            # Compare hand strength
            my_strength = self.evaluate_hand_strength(hole_cards + full_board)
            opp_strengths = [self.evaluate_hand_strength(hand + full_board)
                             for hand in opponent_hands]
            best_opponent = max(opp_strengths)
            if my_strength > best_opponent:
                wins += 1
            elif my_strength == best_opponent:
                # Count a tie only when no opponent beats us
                ties += 1
        # Each tie is counted as half a win, which approximates the pot split
        equity = (wins + ties / 2) / iterations
        return equity
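The class above leaves deck generation and hand ranking to helpers that are not shown. As a rough, self-contained sketch of the same idea, the following uses two-character card strings such as `"As"` and a brute-force ranker that tries every 5-card subset of the 7 available cards. The names `rank5`, `best_hand`, and `estimate_equity` are assumptions made for this illustration, and the ranker is written for clarity, not speed.

```python
import random
from collections import Counter
from itertools import combinations

RANKS = "23456789TJQKA"
DECK = [r + s for r in RANKS for s in "shdc"]


def rank5(cards):
    """Score a 5-card hand as a tuple; a larger tuple is a stronger hand."""
    values = sorted((RANKS.index(c[0]) + 2 for c in cards), reverse=True)
    counts = Counter(values)
    # Ranks ordered by multiplicity, then by value (e.g. full house: trips, pair).
    ordered = tuple(sorted(counts, key=lambda v: (counts[v], v), reverse=True))
    shape = sorted(counts.values(), reverse=True)
    flush = len({c[1] for c in cards}) == 1
    straight = len(counts) == 5 and values[0] - values[4] == 4
    if values == [14, 5, 4, 3, 2]:  # wheel: A-2-3-4-5 plays as a 5-high straight
        straight, ordered = True, (5, 4, 3, 2, 1)
    if straight and flush:
        category = 8
    elif shape == [4, 1]:
        category = 7
    elif shape == [3, 2]:
        category = 6
    elif flush:
        category = 5
    elif straight:
        category = 4
    elif shape == [3, 1, 1]:
        category = 3
    elif shape == [2, 2, 1]:
        category = 2
    elif shape == [2, 1, 1, 1]:
        category = 1
    else:
        category = 0
    return (category, ordered)


def best_hand(cards):
    """Best 5-card score among all subsets of the given cards."""
    return max(rank5(combo) for combo in combinations(cards, 5))


def estimate_equity(hole, board, opponents, iterations=2000, seed=None):
    """Monte Carlo equity against random opponent hands; a tie counts as half a win."""
    rng = random.Random(seed)
    known = set(hole) | set(board)
    unseen = [c for c in DECK if c not in known]
    missing = 5 - len(board)
    total = 0.0
    for _ in range(iterations):
        drawn = rng.sample(unseen, missing + 2 * opponents)
        full_board = board + drawn[:missing]
        opp_cards = drawn[missing:]
        mine = best_hand(hole + full_board)
        best_opp = max(best_hand(opp_cards[i:i + 2] + full_board)
                       for i in range(0, len(opp_cards), 2))
        if mine > best_opp:
            total += 1.0
        elif mine == best_opp:
            total += 0.5
    return total / iterations
```

Calling `estimate_equity(["As", "Ah"], [], opponents=1)` should land in the mid-80% range for pocket aces heads-up, with noise that shrinks as `iterations` grows. Counting every tie as half a win is an approximation when more than two players tie.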

3. Decision optimization algorithm

python

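class DecisionEngine:
    def __init__(self):
        self.strategy_matrix = self.load_strategy_matrix()

    def optimize_bet_sizing(self, game_state, hand_strength, shallow_stack_threshold):
        """Choose a bet size from hand strength and stack depth."""
        pot_size = game_state.pot_size
        stack_depth = game_state.stack / pot_size  # stack measured in pots
        # Bet sizing based on hand strength and stack depth.
        # The stack-depth cutoff is not given here, so the caller passes it in;
        # the "<" comparison is an assumption inferred from the two branch comments.
        if hand_strength > 0.8:  # strong hand
            if stack_depth < shallow_stack_threshold:
                return pot_size * 0.75  # standard bet
            else:
                return pot_size * 0.6  # slightly smaller, to build the pot
        elif hand_strength > 0.4:  # medium strength
            return pot_size * 0.5  # pot control
        else:  # weak hand or bluff
            return pot_size * 0.7  # larger bluff bet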

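    def calculate_fold_equity(self, bet_size, pot_size, opponent_fold_frequency):
        """Expected value of a bet from fold equity alone."""
        fold_equity = opponent_fold_frequency * pot_size
        risk_amount = bet_size
        # Assumes the bet is lost whenever the opponent does not fold
        expected_value = fold_equity - (1 - opponent_fold_frequency) * risk_amount
        return expected_value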
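A useful companion to the fold-equity calculation is the break-even fold frequency: how often the opponent must fold for a pure bluff to show a profit. The sketch below assumes the bluff has no equity when called, so it wins the pot with probability f and loses the bet with probability 1 - f. Setting that expected value to zero gives f = bet / (pot + bet). The function names are illustrative.

```python
def bluff_ev(bet_size, pot_size, fold_frequency):
    """EV of a pure bluff: win the pot when they fold, lose the bet when they call."""
    return fold_frequency * pot_size - (1 - fold_frequency) * bet_size


def break_even_fold_frequency(bet_size, pot_size):
    """Fold frequency at which bluff_ev is exactly zero."""
    return bet_size / (pot_size + bet_size)
```

For example, a 70-chip bluff into a 100-chip pot needs folds about 41% of the time (70 / 170); against an opponent who folds half the time, the same bet has an expected value of +15 chips.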

III. Machine-Learning-Enhanced Strategy

Neural-network opponent modeling

python

import random

import numpy as np
import tensorflow as tf
import torch
import torch.nn as nn

class OpponentModel(nn.Module):
    def __init__(self, input_dim=50, hidden_dim=128):
        super().__init__()
        self.network = nn.Sequential(
            nn.Linear(input_dim, hidden_dim),
            nn.ReLU(),
            nn.Linear(hidden_dim, hidden_dim // 2),
            nn.ReLU(),
            nn.Linear(hidden_dim // 2, 3),  # logits for fold/call/raise
        )

    def forward(self, features):
        """Predict the opponent's fold/call/raise frequencies."""
        return torch.softmax(self.network(features), dim=-1)

# ReplayMemory, STATE_DIM, ACTION_SPACE_SIZE, and ACTIONS are assumed to be defined elsewhere.
class RLDecisionAgent:
    def __init__(self):
        self.q_network = self.build_q_network()
        self.memory = ReplayMemory(10000)

    def build_q_network(self):
        """Build the Q-learning network."""
        return tf.keras.Sequential([
            tf.keras.layers.Dense(256, activation='relu', input_shape=(STATE_DIM,)),
            tf.keras.layers.Dense(128, activation='relu'),
            tf.keras.layers.Dense(64, activation='relu'),
            tf.keras.layers.Dense(ACTION_SPACE_SIZE)  # one Q-value each for fold, call, raise
        ])

    def select_action(self, state, epsilon=0.1):
        """Select an action with an epsilon-greedy policy."""
        if random.random() < epsilon:
            return random.choice(ACTIONS)  # explore
        else:
            q_values = self.q_network.predict(state[np.newaxis])
            # Exploit; assumes ACTIONS is ordered like the network outputs
            return ACTIONS[int(np.argmax(q_values[0]))]
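The epsilon-greedy rule itself does not depend on any deep-learning framework. The following standard-library sketch isolates it: with probability epsilon pick a uniformly random action index, otherwise pick the index with the largest Q-value. The `ACTIONS` tuple and the `rng` parameter are assumptions added for illustration and testability.

```python
import random

ACTIONS = ("fold", "call", "raise")  # assumed ordering of the Q-value outputs


def select_action(q_values, epsilon=0.1, rng=random):
    """Return an action index chosen by an epsilon-greedy policy."""
    if rng.random() < epsilon:
        return rng.randrange(len(q_values))  # explore: uniform random action
    # Exploit: index of the largest Q-value
    return max(range(len(q_values)), key=q_values.__getitem__)
```

With `epsilon=0.0` the choice is purely greedy, so `ACTIONS[select_action([0.1, 0.9, 0.3], epsilon=0.0)]` is `"call"`. In training, epsilon is commonly started high and decayed over time so that early exploration gives way to exploitation.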

IV. Key Performance Optimizations

1. Fast hand evaluation

c++

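// Optimized hand evaluation in C++
#include <cstdint>

// Card, generate_hand_key, and lookup_table are assumed to be declared elsewhere.
uint32_t evaluate_hand_fast(const Card* cards, int count) {
    uint64_t key = generate_hand_key(cards, count);
    return lookup_table[key];  // precomputed lookup table
}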
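The snippet above does not say how the key is generated. One well-known option, shown here in Python purely as an assumption, is a prime-product key: assign each rank a distinct prime and multiply the five primes, so that the key is the same for any ordering of the cards. The sketch ignores suits (no flushes) and fills the table once with a slow reference classifier; `hand_key`, `classify`, `LOOKUP`, and `evaluate_fast` are hypothetical names.

```python
from collections import Counter
from itertools import combinations_with_replacement
from math import prod

# One prime per rank index 0..12 (deuce..ace); products are unique per rank multiset.
PRIMES = (2, 3, 5, 7, 11, 13, 17, 19, 23, 29, 31, 37, 41)
WHEEL = (0, 1, 2, 3, 12)  # A-2-3-4-5


def hand_key(rank_indices):
    """Order-independent key: the product of the primes for the five ranks."""
    return prod(PRIMES[i] for i in rank_indices)


def classify(rank_indices):
    """Slow reference classifier, used only to fill the table."""
    ranks = sorted(rank_indices)
    shape = sorted(Counter(ranks).values(), reverse=True)
    if shape == [4, 1]:
        return "four of a kind"
    if shape == [3, 2]:
        return "full house"
    if shape == [3, 1, 1]:
        return "three of a kind"
    if shape == [2, 2, 1]:
        return "two pair"
    if shape == [2, 1, 1, 1]:
        return "pair"
    # All five ranks are distinct from here on.
    if tuple(ranks) == WHEEL or ranks[4] - ranks[0] == 4:
        return "straight"
    return "high card"


# Precompute every possible 5-card rank multiset once.
LOOKUP = {
    hand_key(combo): classify(combo)
    for combo in combinations_with_replacement(range(13), 5)
    if max(Counter(combo).values()) <= 4  # five of a kind cannot occur
}


def evaluate_fast(rank_indices):
    """Classify a hand with one multiplication chain and one table lookup."""
    return LOOKUP[hand_key(rank_indices)]
```

The table holds 6,175 entries, one per rank multiset. A production evaluator would also handle flushes and store a numeric strength instead of a label, but the lookup pattern is the same.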

2. Parallel Monte Carlo simulation

python

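from concurrent.futures import ThreadPoolExecutor

def parallel_monte_carlo(hole_cards, board, opponents, total_sims=100000, workers=4):
    """Split the equity simulation across workers and average the results."""
    # monte_carlo_simulation is assumed to return an equity estimate for its batch.
    # Note: CPython threads share the GIL, so a pure-Python simulation gains little here;
    # ProcessPoolExecutor exposes the same submit() interface for CPU-bound work.
    sims_per_worker = total_sims // workers
    with ThreadPoolExecutor(max_workers=workers) as executor:
        futures = [
            executor.submit(monte_carlo_simulation,
                            hole_cards, board, opponents, sims_per_worker)
            for _ in range(workers)
        ]
        results = [f.result() for f in futures]
    # Every worker runs the same number of simulations, so a plain mean is unbiased
    return sum(results) / len(results)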
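Two details matter when splitting a simulation across workers: each worker needs its own random stream, and the results should be combined as counts so that uneven chunk sizes do not bias the estimate. The sketch below shows both on a deliberately small problem with a known answer, the chance that a pocket pair improves to a set or better on the flop (1 - C(48,3)/C(50,3), about 11.76%). The function names and the seeding scheme are assumptions for illustration.

```python
import random
from concurrent.futures import ThreadPoolExecutor


def count_flopped_sets(n_sims, seed):
    """Count flops that contain at least one of the two cards matching our pair."""
    rng = random.Random(seed)  # independent stream per worker
    hits = 0
    for _ in range(n_sims):
        # 50 unseen cards; indices 0 and 1 stand for the two matching cards.
        flop = rng.sample(range(50), 3)
        if min(flop) < 2:
            hits += 1
    return hits


def parallel_estimate(total_sims=100_000, workers=4, base_seed=0):
    """Split the simulations into chunks and combine the raw hit counts."""
    chunk, extra = divmod(total_sims, workers)
    sizes = [chunk + (1 if i < extra else 0) for i in range(workers)]
    with ThreadPoolExecutor(max_workers=workers) as executor:
        futures = [
            executor.submit(count_flopped_sets, n, base_seed + i)
            for i, n in enumerate(sizes)
        ]
        hits = sum(f.result() for f in futures)
    return hits / total_sims
```

Because this workload is pure Python, threads mainly illustrate the structure. Swapping in `ProcessPoolExecutor` keeps the same `submit` and `result` calls and allows the chunks to run on separate cores.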

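V. Practical Recommendations

Development priorities

1. Foundation: implement a reliable rules engine first

2. Math core: refine the probability calculations and decision logic

3. Data-driven: add machine learning and adaptive capabilities

4. Performance: optimize to meet real-time requirements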

Testing and validation

python

def test_ai_performance(poker_ai):
    """Benchmark the AI's decisions against a labeled set of test hands."""
    test_cases = load_test_hands()  # assumed helper that returns the labeled cases
    correct_decisions = 0
    for case in test_cases:
        ai_decision = poker_ai.make_decision(case.game_state)
        if ai_decision == case.optimal_decision:
            correct_decisions += 1
    accuracy = correct_decisions / len(test_cases)
    print(f"AI decision accuracy: {accuracy:.2%}")

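This set of algorithms combines traditional game-theoretic analysis with modern machine learning techniques, with the goal of making near-optimal decisions in complex poker games.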